Why Agentic AI?

Explore the cutting-edge world of Agentic AI, where technology transcends automation to create systems that think, learn, and adapt autonomously. This resource hub dives deep into the innovative principles behind Agentic AI, showcasing how it transforms traditional workflows into dynamic, intelligent processes. Through articles, videos, and podcasts, discover how Memra is leading the future of AI-driven decision-making and enterprise transformation.

Below, you'll find articles, videos, and podcasts that delve into how this revolutionary approach can transform your business operations.

Podcast: Overcoming AI Challenges and Exploring the Future of Agentic AI

Date: 08.20.24

In this episode, Amir Behbehani, Founder and Chief AI Engineer at Memra, discusses with host Matt Paige the hurdles AI must overcome for full enterprise integration, including data access and memory management. The conversation delves into the concept of ‘agentic AI,’ the emergent AI stack, and how Memra is pioneering these advancements. They also speculate on AI’s transformative impact on the future labor market.

Video: Demonstrating Document-to-Database Capabilities with Agentic AI

In this video, we introduce Memra agents powered by language models, designed to perform tasks while maintaining context. Unlike traditional tools, these agents don’t just retrieve information—they perform job functions. Watch as I demonstrate how an agent extracts data from a legal document and writes it to a database, showcasing the potential of these agents to streamline and automate complex tasks.

Video: AI Agents in Action—Task Delegation and Workflow Automation

In this video, we demonstrate the capabilities of Memra’s AI agent in extracting data from a document and accurately writing it to a target database. The video covers the importance of data models and metadata in ensuring precise data handling and provides a real-world example of how our agent automates complex data processing tasks.

Video: Revolutionizing Data Integration with Intelligent AI Agents

At Memra, we're redefining what's possible with intelligent agents. This demo showcases the precision and efficiency of our AI agents in extracting data from documents and writing it to target databases. From document analysis to target database identification and execution, see how our agents streamline workflows with unmatched accuracy. We also explore the role of data models, metadata, and knowledge graphs in ensuring consistent, error-free results.

The Emergent AI Stack

Date: 12.04.23

Author: Amir Behbehani

Artificial Intelligence advancements, especially with Large Language Models (LLMs) like GPT-4, are transforming traditional applications. These models have evolved from simple text processing tools to handling complex analytical tasks, significantly changing how we interact with technology. However, a crucial challenge remains: improving memory management within LLMs to fully unlock the potential of autonomous GPT agents in performing professional tasks from start to finish. These tasks may include building a software application or generating a bespoke contract.

Current AI systems, including autonomous agents, often face limitations in their ability to perform complex cognitive tasks such as problem-solving, mathematical reasoning, and unsupervised operations. These limitations stem from inadequate memory management, which impairs decision-making and leaves analytical capabilities sub-optimal. This is particularly evident when attempting to perform a complex task based solely on a single prompt, as seen in AutoGPT.

Note: This article is intended for AI novices and those with high-level knowledge. It aims to provide a broad understanding of the Emergent AI Stack and its significance.

AI can automate a wide range of tasks, improving human efficiency and reducing labor costs, especially in countries with high labor costs, such as the United States.

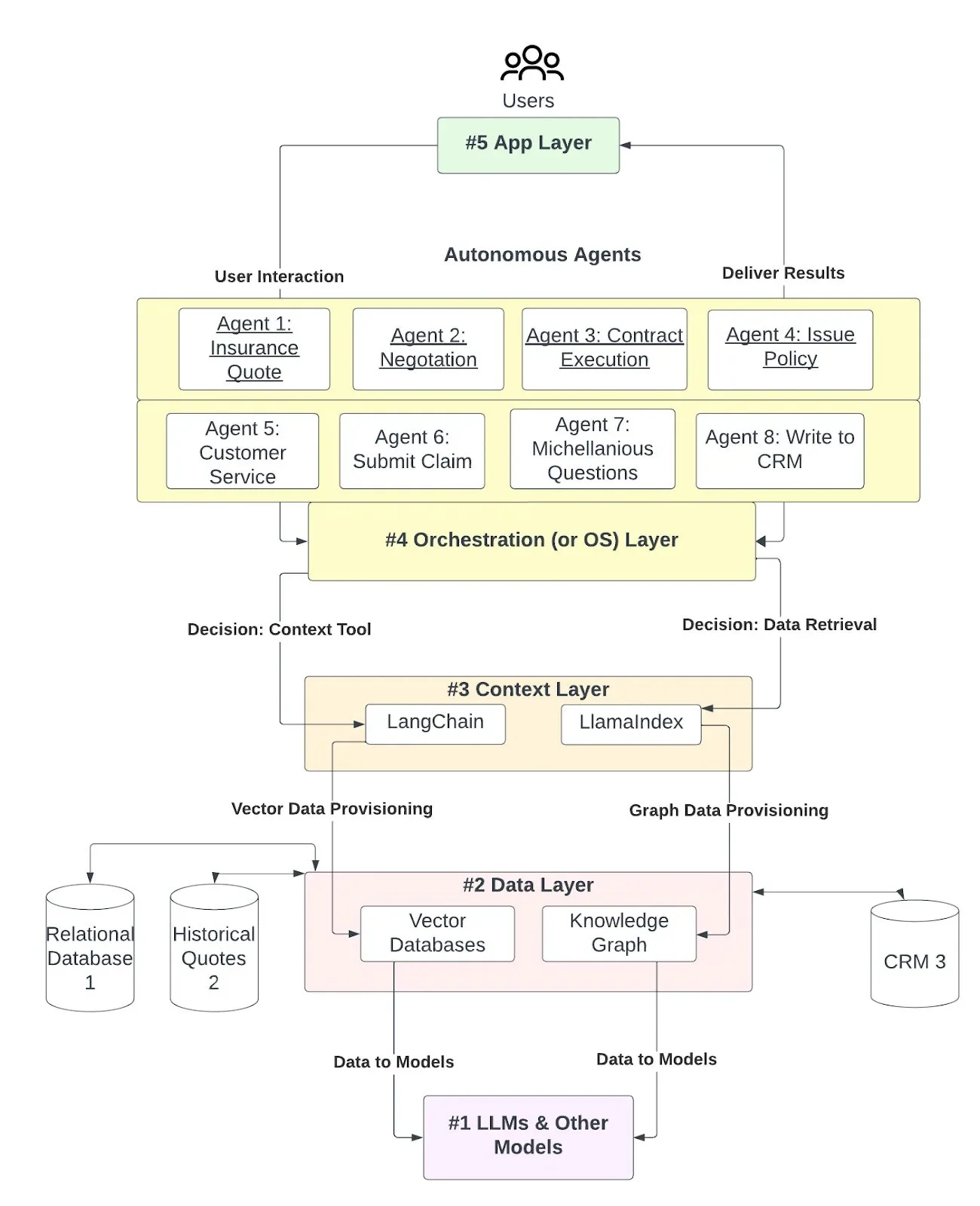

In this post, I will use the insurance industry as an example. However, this new AI technology stack can be applied to any industry vertical. The insurance industry has several primary characteristics and use cases, including:

Lead generation: consumers seeking to insure large purchases such as houses, cars, health/life

Quote generation once all necessary data are received

Negotiation and optimization of quotes

Signing of contracts

Document and policy delivery

Maintenance and retention, including claims

Let’s jump into the first and foundational layer of the new AI stack:

Layer 1: Foundation Layer — The Central Processing Unit

At the core of the AI stack is the Foundation Layer, where LLMs (Large Language Models) process and analyze vast amounts of data. These models, such as GPT and BERT, are becoming more capable of approximating general intelligence. However, they also encounter challenges, such as generating biased or factually inaccurate content. For instance, GPT-4, despite its sophistication, can still produce content that is biased or inaccurate. This highlights the importance of ongoing advancements in this layer.

Let’s say you want to use the foundational models to interact with your content and data, particularly within the enterprise. Out of the box, these models may not perform reliably well. The results can be variable if you ask the same question 10 different ways. Additionally, if you have a large amount of proprietary content you want to feed into these models, you will need a data store to facilitate interaction with the LLMs. Relying on a prompt alone will not suffice. You’ll need to set up a vector database at a minimum and preferably a knowledge graph as well.

Foundational models have seen significant advancements in understanding input and generating output across many modalities (text, image, audio, and video). An increasing number of models also specialize in different areas, so this layer is a critical piece of our AI stack.

Layer 2: The Data Layer: AI’s Long-Term Memory

The insurance vertical has vast data on its customers and policyholders, and these datasets typically sit in relational databases or PDFs. These datasets must be readily available for ingestion into the new AI tech stack, and the stack may also need to write to these databases as prospects and customers take further actions. LLMs estimate the probability of the next most likely word, pixel, etc., based on their vast training data. To retrieve enterprise content by meaning, the content is converted into numerical vectors (embeddings) and stored in vector databases, which support similarity search and are distinct from standard relational databases.

A vector database can be helpful for general inquiries, such as determining the document type or whether a contract has been signed. However, a knowledge graph is better suited for retrieving more specific information, such as the dollar value of a contract or the counterparty’s title in an agreement. You can hone your responses by augmenting the LLM with these additional data sources and dynamically selecting the appropriate source based on the question type (general or specific).
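The idea of choosing a source by question type can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not a description of any particular product: the keyword-based `classify_query`, the in-memory `VECTOR_STORE` and `KNOWLEDGE_GRAPH` stand-ins, and the sample contract values are all hypothetical. A real system would call a vector database and a graph database, and would likely use a model rather than keywords to classify the question.

```python
from dataclasses import dataclass

# Hypothetical stand-ins for a vector database and a knowledge graph.
VECTOR_STORE = {
    "contract_001": "Signed master services agreement between Acme and Globex.",
}
KNOWLEDGE_GRAPH = {
    ("contract_001", "dollar_value"): "250000",
    ("contract_001", "counterparty_title"): "Chief Financial Officer",
}

# Assumed heuristic: questions containing these words ask for an exact fact.
SPECIFIC_TERMS = {
    "dollar_value": ("dollar", "value", "amount"),
    "counterparty_title": ("title",),
}


@dataclass
class Answer:
    source: str  # which store answered: "knowledge_graph" or "vector_store"
    text: str


def classify_query(question: str):
    """Return the graph attribute a question asks for, or None if it is general."""
    lowered = question.lower()
    for attribute, keywords in SPECIFIC_TERMS.items():
        if any(word in lowered for word in keywords):
            return attribute
    return None


def answer(doc_id: str, question: str) -> Answer:
    """Send specific questions to the graph and general ones to the vector store."""
    attribute = classify_query(question)
    if attribute is not None and (doc_id, attribute) in KNOWLEDGE_GRAPH:
        return Answer("knowledge_graph", KNOWLEDGE_GRAPH[(doc_id, attribute)])
    return Answer("vector_store", VECTOR_STORE[doc_id])
```

With this sketch, a question about the dollar value of the contract is answered from the graph, while a question about the document type falls back to the vector store.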

A well-thought-out data layer is essential for storing and managing enterprise data, but it also brings challenges in data preparation, integration, and governance. Efficient storage and retrieval are crucial, and vector databases such as Pinecone are increasingly used in AI applications for this purpose, which is especially important for large-scale AI models.
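To make the retrieval step concrete, here is a minimal sketch of the similarity search a vector database performs. It is written in plain Python rather than against any vendor's API, and the three-dimensional vectors and document names used in the usage note are made up for illustration. Production systems use embeddings with hundreds or thousands of dimensions and approximate search indexes.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def nearest(query, index, k=1):
    """Return the ids of the k stored vectors most similar to the query."""
    ranked = sorted(
        index,
        key=lambda doc_id: cosine_similarity(query, index[doc_id]),
        reverse=True,
    )
    return ranked[:k]
```

For example, given a hypothetical index `{"auto_policy": [0.9, 0.1, 0.0], "home_policy": [0.1, 0.9, 0.0], "claim_form": [0.0, 0.2, 0.9]}`, the query vector `[0.8, 0.2, 0.0]` ranks `auto_policy` first because the two vectors point in nearly the same direction.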

Layer 3: The Context Layer: Enhancing AI with Short-Term Memory

After the insurance data are ingested into the AI stack, you can augment your prompts with specific data. This keeps chat sessions from losing context. Tools like LangChain and LlamaIndex can enhance the LLM’s ability to handle complex tasks and maintain context awareness. This layer acts like a computer’s RAM, providing quick access to relevant information for immediate processing.
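Prompt augmentation can be illustrated without any framework. The sketch below is an assumption-laden toy, not the LangChain or LlamaIndex API: the `ConversationContext` class, its `max_turns` parameter, and the prompt layout are hypothetical names chosen for this example. It keeps a bounded window of recent turns (the "RAM") and prepends retrieved data to each new question.

```python
from collections import deque
from typing import List


class ConversationContext:
    """Keeps the most recent turns so each prompt carries short-term memory."""

    def __init__(self, max_turns: int = 3):
        # A deque with maxlen drops the oldest turn automatically when full.
        self.turns = deque(maxlen=max_turns)

    def add_turn(self, speaker: str, text: str) -> None:
        self.turns.append(f"{speaker}: {text}")

    def build_prompt(self, question: str, retrieved_facts: List[str]) -> str:
        """Combine retrieved data, recent turns, and the new question."""
        facts = "\n".join(f"- {fact}" for fact in retrieved_facts)
        history = "\n".join(self.turns)
        return (
            f"Known policy data:\n{facts}\n\n"
            f"Recent conversation:\n{history}\n\n"
            f"Customer question: {question}"
        )
```

The bounded window is a deliberate simplification: older turns fall out of the prompt, which is why durable facts belong in the data layer rather than in the conversation history.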

By working with layers 1, 2, and 3, your query responses should be significantly improved compared to simply calling the GPT API. This layer still stands to benefit from continued innovation. Current deep-learning methods face challenges in generalization, abstraction, and understanding causality, highlighting the need for more robust AI systems, especially when working with autonomous agents that automate the prompt / retrieval dynamic.

Layer 4: The Operating System

Wouldn’t it be great if your application could determine in real time which data source to call based on the question type? And what if it could do so on an as-needed basis? For instance, an insurance sales agent might not have access to a specific data source, but the underwriter does. Or, when a customer inquires about their coverage, and it’s unclear whether it relates to house insurance or auto insurance, where should the search for data begin, or should the chatbot ask a clarifying question first? This is where the concept of memory management, akin to that in a computer’s operating system, becomes crucial.

Here, the Operating System (OS) layer of our AI architecture plays a crucial role:

OS-Driven Query Analysis: The OS layer analyzes the detail level in each query received by the autonomous agents. It determines the required depth and specificity for responses, guiding agents in providing a broad overview or a detailed answer.

OS-Guided Data Source Alignment: The OS identifies the granularity of each query and directs agents to the most suitable data source. This ensures agents access the most relevant information, from general databases for broader questions to specialized repositories for detailed inquiries.

OS-Controlled Data Source Flexibility: The OS enables agents to transition seamlessly between various data sources as the conversation evolves, maintaining the relevance and accuracy of the information.

OS-Managed Contextual Memory: The OS manages memory allocations for the agents, storing recent conversation parts in short-term memory and broader topics or user history in long-term memory for sustained relevance.

OS-Structured Conversational Progression: The OS ensures that each question and answer sequence leads the conversation toward a resolution, maintaining focus and preventing deviations from the main topic.

OS-Facilitated Socratic Engagement: The OS directs agents to use a Socratic approach for problem-solving, deconstructing complex problems into simpler components for individual addressing and collective synthesis.

OS-Enabled Comprehensive Problem Solving: Under the OS’s orchestration, autonomous agents collaboratively solve various problems, adapting to evolving requirements with minimal external direction.

These roles emphasize the critical part the operating system (OS) plays in coordinating the functionality and intelligence of autonomous agents within the system.
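A few of these roles can be sketched together: access-aware source selection, asking a clarifying question when the insurance line is unclear, and keeping short-term and long-term memory. Everything named here is hypothetical and chosen only for illustration: the `SOURCE_PERMISSIONS` table, the keyword map, the `AgentMemory` structure, and the string results. An actual OS layer would be far richer.

```python
from dataclasses import dataclass, field

# Hypothetical access rules: which roles may read which data source.
SOURCE_PERMISSIONS = {
    "policy_summaries": {"sales_agent", "underwriter"},
    "underwriting_files": {"underwriter"},
}

# Hypothetical keyword map from insurance line to trigger words.
LINE_KEYWORDS = {"home": ("house", "home"), "auto": ("car", "auto")}


@dataclass
class AgentMemory:
    short_term: list = field(default_factory=list)  # recent questions
    long_term: dict = field(default_factory=dict)   # durable facts about the user


def detect_line(question: str):
    """Return the insurance line named in the question, or None if unclear."""
    lowered = question.lower()
    matches = [
        line for line, words in LINE_KEYWORDS.items()
        if any(word in lowered for word in words)
    ]
    return matches[0] if len(matches) == 1 else None


def handle_query(role: str, question: str, source: str, memory: AgentMemory) -> str:
    """Decide whether to deny, clarify, or dispatch a query to a data source."""
    memory.short_term.append(question)
    if role not in SOURCE_PERMISSIONS.get(source, set()):
        return "deny: role lacks access to " + source
    # Fall back to long-term memory when the question itself is ambiguous.
    line = detect_line(question) or memory.long_term.get("last_line")
    if line is None:
        return "clarify: is this about home or auto insurance?"
    memory.long_term["last_line"] = line
    return f"dispatch: search {source} for {line} coverage"
```

Note the design choice in `handle_query`: once the customer has mentioned their car, a later ambiguous question is resolved from long-term memory instead of triggering another clarifying question.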

Platforms that facilitate dynamic memory management, such as Memra, are crucial in enhancing retrieval output. These frameworks assist LLMs in determining whether the questions being asked necessitate more general or specific responses, significantly improving context and minimizing hallucinations. This is particularly important when transforming large amounts of unstructured text into datasets for quick and accurate ingestion into AI and non-AI record systems. Such platforms provide new frameworks for developing and deploying AI systems while integrating better with existing operations.

At this layer, AI interacts with other AI (autonomous agents) that perform intermediary tasks without human intervention. AI can break down complex tasks into simpler subprocesses and assign them to different agents to complete. The results are then aggregated into a complete solution. The integration hub manages this process and ensures that the agents’ output reaches the application layer.
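The decompose, delegate, and aggregate pattern described above can be sketched as follows. The two "agents" here are hypothetical plain functions that use simple string parsing as a stand-in for model-backed agents, and `run_task` is an assumed name for the coordinating step. The point is the shape of the orchestration, not the extraction logic.

```python
from typing import Callable, Dict, List


def extract_parties(text: str) -> dict:
    """Hypothetical agent: pull the party names from 'between X and Y'."""
    _, _, tail = text.partition("between ")
    first, _, rest = tail.partition(" and ")
    second = rest.split(",")[0].split(".")[0]
    return {"parties": [first.strip(), second.strip()]}


def extract_amount(text: str) -> dict:
    """Hypothetical agent: find the first dollar amount in the text."""
    for token in text.split():
        if token.startswith("$"):
            digits = token.strip("$.,").replace(",", "")
            return {"amount": int(digits)}
    return {"amount": None}


# Registry mapping each subtask name to the agent that handles it.
AGENTS: Dict[str, Callable[[str], dict]] = {
    "parties": extract_parties,
    "amount": extract_amount,
}


def run_task(document: str, subtasks: List[str]) -> dict:
    """Assign each subtask to its agent and merge the partial results."""
    result: dict = {}
    for name in subtasks:
        result.update(AGENTS[name](document))
    return result
```

Calling `run_task` on a sentence such as "Agreement between Acme and Globex, valued at $250,000." with the subtasks `["parties", "amount"]` returns one merged record that could then be handed to the application layer.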

In the B2C context, using LLMs for tasks beyond text and image generation represents a significant leap in productivity. For example, LLMs can book flights with minimal user input. In the B2B context, particularly in the insurance industry, AI can automate processes such as generating quotes, drafting and executing contracts, and issuing policy documents with minimal employee involvement. To achieve this, it is necessary to develop an OS layer that manages the user journey, ensuring each step is completed before progressing to the next while assisting users with any questions or concerns. Initiatives like AutoGPT are working towards making this a reality. However, building the OS layer from the ground up, industry by industry, is essential.
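The requirement that each step be completed before the next begins can be expressed as a small state tracker. This is a sketch under assumptions: the step names are shorthand for the insurance use cases listed earlier in this article, and the `UserJourney` class is hypothetical.

```python
# Shorthand labels for the insurance use cases listed earlier in the article.
JOURNEY_STEPS = ["lead", "quote", "negotiation", "signing", "delivery", "maintenance"]


class UserJourney:
    """Tracks progress and refuses to skip ahead of an incomplete step."""

    def __init__(self):
        self.completed = []

    @property
    def next_step(self):
        """The step that must be completed next, or None when all are done."""
        if len(self.completed) == len(JOURNEY_STEPS):
            return None
        return JOURNEY_STEPS[len(self.completed)]

    def complete(self, step: str) -> None:
        """Mark a step as done, but only if it is the expected next step."""
        if step != self.next_step:
            raise ValueError(
                f"cannot complete {step!r}; next step is {self.next_step!r}"
            )
        self.completed.append(step)
```

An attempt to complete `"signing"` before `"quote"` raises an error, which is the point at which an OS layer could step in to answer the user's questions or request missing information.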

The OS layer is an area ripe for innovation across the AI industry, and we expect to see significant developments in this area in 2024.

Layer 5: The Application Layer: AI’s Interface with Users (or other AI)

The Application Layer is where AI becomes tangible for users, simplifying complex processes into user-friendly applications. This layer is crucial for making AI accessible to many users. It transforms the underlying AI technologies into practical applications that users can interact with, thus democratizing the benefits of AI. In the insurance example, imagine a customer downloading a smartphone app or going to a webpage and interacting with a chatbot that takes care of all their insurance needs when they simply say what they want in plain English. No more navigating endless menus trying to find something; now we can simply ask. The front end also benefits the back end. As users interact with an AI bot and supply all the necessary information to submit a quote or a claim, we can use the front end and the other layers to convert user inputs into datasets that address customer needs more effectively than a legacy navigation menu.

Conclusion:

We explored the different layers of the Emergent AI Stack, beginning with the Foundation Layer. In this layer, LLMs process and analyze vast amounts of data. The Data Layer is responsible for storing and managing enterprise data to enable efficient retrieval and integration with AI. The Context Layer enhances AI with short-term memory, preserving context during interactions. The OS Layer, similar to an operating system, manages memory, directs queries, and facilitates problem-solving. Lastly, the Application Layer presents AI technologies through user-friendly interfaces, making the benefits of AI accessible to all.

In this evolving landscape, the OS Layer of the Emergent AI Stack plays a crucial role, similar to the one operating systems came to play in personal computers. Early personal computers transitioned from basic command-line interfaces to complex, user-friendly operating systems that seamlessly managed software applications, multitasking, and user interaction. Similarly, the OS Layer in the Emergent AI Stack is becoming increasingly important. It orchestrates the integration and interaction between different AI layers, manages data flow, and ensures that AI applications effectively respond to user needs.

Looking to 2024, the focus is expected to shift towards enhancing the sophistication of the OS Layer in the Emergent AI Stack. The year will likely witness significant advancements in the orchestration and management of AI systems. These developments can potentially drive the creation of even more dynamic and innovative enterprise applications than in 2023.

The Case for Vertical Integration in AI: Aligning Innovation with Enterprise Value

Date: 09.04.24

Author: Amir Behbehani

Introduction

As artificial intelligence (AI) reshapes the business landscape, a critical question emerges: How can AI startups and established enterprises best collaborate to create long-term value?

This article argues that, in most cases, AI startups are best served by integrating within larger organizations.

At the same time, enterprises benefit most from acquiring agile AI startups with deep expertise and immediately applicable technology assets. This symbiotic relationship, we contend, is superior to either entity 'going it alone.' We explore the multifaceted reasons this integration is advantageous for both parties, creating a synergy that outperforms traditional approaches of independent development or hiring from the open labor market.

AI startups and established enterprises face unique challenges in this evolving ecosystem. While startups often focus on achieving product-market fit, this paper posits that there may be better approaches for AI companies than this traditional approach. Instead, we explore the 'option premium' concept—the potential future value of a company's technology—and argue that preserving and enhancing this premium is crucial, even as startups generate revenue.

Central to our argument is the recognition that AI is better understood as a feature rather than a standalone product.

Consequently, the traditional emphasis on product-market fit (PMF) might misalign with a startup's long-term goals. Instead, vertical integration offers a more strategic approach. By focusing on feature-market fit within established enterprises, AI startups can preserve their option premium while aligning their innovations with the Total Addressable Market (TAM) of potential acquiring entities. This strategy allows startups to leverage existing enterprise distribution channels and market presence.

For AI startups, particularly those without significant revenue, the stock price is inherently tied to this theoretical option premium. As a startup solves for product-market fit (PMF), some of this inherent option premium naturally converts to underlying equity value, like potential energy converting to kinetic energy. However, this conversion can be problematic if the underlying enterprise value is low. In such cases, the startup is better served by exercising the inherent option value through vertical integration rather than pursuing traditional PMF.

It's crucial to note that we're not discussing explicit option contracts here. Instead, we're examining a structure where the stock price isn't solely a function of profit share distilled to shareholder value, as there may be no profits to distribute. This inherent option premium reflects the market's anticipation of the startup's future success and innovation potential.

This perspective necessitates a shift from seeking product-market fit to achieving feature-market fit, a goal that inherently requires vertical integration within a firm. AI startups should focus on solving specific use cases within a vertically integrated structure rather than pursuing broad, horizontal applications that might prematurely erode their option premium.

This approach doesn't preclude revenue generation. On the contrary, targeted revenue generation can increase the option premium. However, it emphasizes that startups should not exhaust their option premium in pursuing conventional product-market fit. Instead, we advocate for a dedicated partnership with a company hosting the vertical integration, allowing the startup to focus on innovation while leveraging the partner's established infrastructure and market presence.

It's crucial to note that this approach differs significantly from that of vertical-focused AI-first companies going to market to service a specific vertical. While such companies may have disruptive technology that could eventually give them a distributional advantage, they still face the considerable challenge of solving for distribution. In contrast, our proposed integration model leverages established enterprises' existing distribution channels. This is a crucial reason we advocate for vertical integration into a going concern: the larger enterprise already has the distribution network in place, providing an immediate advantage that even highly innovative AI-first startups struggle to match quickly.

The "reverse acqui-hire" strategy is particularly effective for achieving vertical integration. This approach involves larger companies hiring key personnel from AI startups while licensing their technology, offering a unique pathway to rapidly enhance AI capabilities across multiple layers of the stack.

This approach benefits both startups and enterprises:

Startups can maintain strategic flexibility while developing AI technologies that complement and enhance existing enterprise structures.

Enterprises can deeply integrate AI into their operations, maximizing efficiency, accelerating innovation, and creating new avenues for value creation within their established markets.

To fully appreciate the importance of vertical integration in AI, it's essential to understand two key concepts:

Vertical Integration in AI: A strategy where a company controls multiple layers of the AI development and deployment process, from foundational infrastructure to end-user applications. This approach often involves owning or closely managing various components of the AI stack rather than relying solely on external vendors or partners.

The AI Stack: A layered architecture of AI systems, typically consisting of:

A Foundational Layer with Large Language Models (LLMs)

A Retrieval-Augmented Generation (RAG) Layer

A Context Refinement Layer

An Agentic Framework Layer

An Application Layer

By exploring these aspects, we aim to provide a comprehensive view of why vertical integration of AI within existing business structures is imperative for long-term success in an increasingly AI-driven future. This strategy allows companies to fully harness AI's potential, creating powerful feedback loops and network effects that can reshape entire industries.

It's crucial to note that this paper advocates for vertical AI integration less within AI-specific companies and more within existing companies that already manage a comprehensive business stack. The goal is to seamlessly incorporate the AI stack into the broader business stack, enhancing and transforming existing operations rather than creating isolated AI entities. This approach ensures that AI capabilities are deeply woven into the business fabric, driving innovation and efficiency across all levels of operation.

By vertically integrating AI within established business structures, companies can leverage their strengths while developing cutting-edge AI capabilities, positioning themselves for success in an increasingly AI-driven future. In the following sections, we will delve into how companies can strategically integrate these layers to maximize the value of their AI investments and position themselves for long-term success.

The following diagram illustrates the key concepts and their relationships in our argument for vertical integration in AI, culminating in the reverse acqui-hire strategy. It visually represents how foundational AI concepts, business factors, and industry challenges converge to support this strategic approach, ultimately leading to successful AI integration and preparedness for an AI-first future.

The Evolution from Machine Learning to AI

The early 2010s saw unprecedented excitement around machine learning in Silicon Valley. Startups proliferated, each promising to revolutionize industries with sophisticated algorithms. However, this period also revealed crucial lessons about integrating intelligent technologies into business ecosystems.

Key Developments:

1. Initial Enthusiasm: Venture capital flowed freely into machine learning startups that offered services across various sectors.

2. Market Reality Check: Many of these companies faced difficulties, either folding or being acquired by larger entities.

3. Strategic Shift: There was increasing pressure to adopt industry-specific go-to-market strategies, focusing on sectors where machine learning could thrive as a standalone product.

4. Integration Imperative: The market underscored the need to integrate machine learning within existing, industry-specific systems rather than adopting a horizontal, one-size-fits-all approach.

Critical Lesson: Machine learning proved most valuable when integrated as a feature within broader solutions rather than a standalone product. Companies that thrived embedded machine learning capabilities into existing products or services, enhancing their value proposition.

Parallel with the Current AI Landscape: Today's AI excitement mirrors the early days of machine learning, though with even greater intensity and scale. However, the lessons learned from machine learning suggest a different approach for AI:

1. Vertical Integration: Instead of positioning themselves as standalone AI companies, AI startups should focus on vertical integration within established enterprises.

2. Deep Embedding: This requires deeply embedding AI into an organization's operations to maximize its value and align it closely with business needs.

3. Ecosystem Integration: Like the standalone machine learning companies that struggled before them, AI companies that fail to integrate with larger ecosystems may encounter significant challenges.

The evolution from machine learning to AI represents more than a technological advancement; it marks a fundamental shift in how intelligent systems are conceptualized and implemented within business contexts. This shift highlights the importance of vertical integration in AI, where the technology becomes an integral part of the entire business stack, from foundational infrastructure to end-user applications.

By understanding the lessons from the machine learning era, companies can avoid the pitfalls of treating AI as a standalone product. Instead, they can harness AI's full potential through deep, vertical integration across their operations. This strategy maximizes the value of AI investments and enables companies to develop more comprehensive, AI-enhanced solutions that closely align with specific business needs and market demands.

AI as a Feature, Not a Product

Viewing AI as a feature rather than a standalone product fundamentally reshapes AI business models. This perspective challenges companies to move beyond isolated AI solutions and instead focus on how AI can enhance existing products, services, and processes. However, integrating AI as a feature presents its own challenges. Chief among these is the initial resistance to incorporating AI features into established systems, often because it requires a paradigm shift from traditional product development to a more experimental, iterative approach.

Integrating AI as a feature requires adopting a 'design of experiment' mindset, a concept many companies find challenging. This approach involves identifying specific business problems that AI could address, assessing the available data, developing experimental models in controlled environments, and continuously refining the AI feature based on the outcomes. This mirrors the challenges encountered during the early days of machine learning integration, which also required a multidisciplinary, Socratic approach and often necessitated expert guidance.

A critical difference between developing AI and traditional machine learning systems is how they handle inputs and outputs. While machine learning typically begins with deterministic inputs and produces stochastic outputs, AI systems often start with stochastic outputs from models like LLMs (Large Language Models). These outputs are inherently unpredictable, making it crucial to engineer systems that can turn them into more deterministic, controlled inputs for downstream steps when needed while still maintaining the flexibility required for reasoning.
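One common way to impose control on stochastic model output is to validate it against the fields a downstream system expects and to retry when validation fails. The sketch below assumes a hypothetical `generate` callable that stands in for a model call and returns raw text. The field names `counterparty` and `amount` are illustrative, not taken from any real schema.

```python
import json


def parse_contract_fields(raw: str) -> dict:
    """Validate a model's raw text against the fields the database expects."""
    data = json.loads(raw)  # raises json.JSONDecodeError, a ValueError subclass
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    if not isinstance(data.get("counterparty"), str):
        raise ValueError("counterparty must be a string")
    amount = data.get("amount")
    # bool is a subclass of int in Python, so reject it explicitly.
    if isinstance(amount, bool) or not isinstance(amount, (int, float)):
        raise ValueError("amount must be a number")
    return {"counterparty": data["counterparty"], "amount": float(amount)}


def extract_with_retries(generate, document: str, max_attempts: int = 3) -> dict:
    """Call the model until its output validates or attempts run out."""
    last_error = None
    for _ in range(max_attempts):
        try:
            return parse_contract_fields(generate(document))
        except ValueError as error:
            last_error = error
    raise RuntimeError(f"no valid output after {max_attempts} attempts") from last_error
```

The validator is deliberately strict: anything that fails never reaches the database, so the unpredictability stays contained at the model boundary.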

This process requires meticulous system design, where attention to detail, rigorous quality assurance, and tight feedback loops between problem identification and solution deployment are crucial. Without these elements, incorrect solutions could propagate throughout the system, leading to suboptimal or inaccurate outcomes. Employing a 'design of experiment' methodology is essential here, ensuring that AI integration addresses the correct problems and enhances overall system reliability and effectiveness.

Consider a real-world example: a company currently employing staff to process documents, such as contracts, for due diligence or approval. Integrating AI into this workflow requires thorough process mining to understand the end-to-end workflow, designing an AI solution around this specific use case, iterating on the code to ensure the AI's output matches or surpasses human performance, and maintaining reliability and accuracy. The challenge lies in developing the AI capability, seamlessly integrating it into existing processes, and ensuring it meets or exceeds current standards.
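Checking whether the AI's output "matches or surpasses human performance" implies a measurable comparison. A minimal sketch, assuming a set of documents with reviewed reference answers and a human accuracy figure supplied by the team, might look like this. The function names and the field-level accuracy metric are assumptions for illustration, since the article does not prescribe a metric.

```python
def field_accuracy(predictions: list, gold: list) -> float:
    """Fraction of reference fields that the predictions reproduce exactly."""
    total = 0
    correct = 0
    for predicted, expected in zip(predictions, gold):
        for key, value in expected.items():
            total += 1
            if predicted.get(key) == value:
                correct += 1
    return correct / total if total else 0.0


def ready_to_deploy(agent_accuracy: float, human_accuracy: float) -> bool:
    """The agent qualifies only when it at least matches the human baseline."""
    return agent_accuracy >= human_accuracy
```

Running this on every iteration of the code gives the tight feedback loop between problem identification and solution deployment that the 'design of experiment' approach calls for.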

Successfully integrating AI as a feature often requires leaders who can navigate the complexities of AI integration, bridge the gap between technical capabilities and business needs, guide the 'design of experiment' processes, and help companies overcome resistance to change by adopting new ways of thinking about their processes and products.

When successfully implemented, AI can significantly enhance existing products and services by improving efficiency, automating complex tasks like document processing, and dramatically reducing processing times and labor costs. Well-designed AI systems can also enhance accuracy by minimizing human error in data entry and analysis. Additionally, AI features can improve scalability, enabling businesses to handle increased workloads without a proportional rise in staff. Perhaps most excitingly, AI can introduce new functionalities previously impractical or impossible with human-only processes.

Successful AI integration often begins with a narrow, well-defined use case. This approach facilitates more manageable development and integration, allows straightforward measurement of success and ROI, and enables quicker iterations and improvements. By focusing on specific, high-value use cases, companies can build the confidence and expertise needed for broader AI initiatives, ultimately demonstrating AI's value as a feature and paving the way for broader adoption and integration.

As companies become more adept at integrating AI features, we can expect a shift from viewing AI as a novel addition to recognizing it as a fundamental capability. This evolution will likely mirror the trajectory of other technologies, such as the internet or mobile capabilities, which transitioned from novel features to essential components of modern business operations.

The AI Stack and Vertical Integration

The AI stack comprises five interconnected layers, each critical to developing and deploying sophisticated AI systems. At its foundation are large language models (LLMs) like GPT-4, which process vast amounts of data to approximate general intelligence. However, they face challenges such as potential bias and inaccuracies. Building on this, the Data Layer acts as AI's long-term memory, utilizing advanced technologies like vector databases to efficiently store and retrieve enterprise data, seamlessly integrating AI with existing business processes.

The Context Layer provides AI with short-term memory, enabling it to maintain context during interactions. Tools like LangChain and LlamaIndex are essential here, enhancing AI's ability to handle complex tasks and maintain context awareness. Next, the Operating System Layer functions like a computer's OS, managing memory, directing queries, and facilitating problem-solving. It handles tasks such as analyzing query details, aligning data sources, and managing contextual memory for AI agents. Finally, the Application Layer makes AI accessible to users, transforming underlying technologies into practical, user-friendly applications. This layer acts as the interface between AI systems and users (or other AI systems).

Vertical integration across these layers offers significant advantages. Companies can customize each layer to meet their needs, potentially outperforming off-the-shelf solutions. This integration facilitates smoother data flow between layers, enhancing AI performance and creating more coherent user experiences. By reducing reliance on external vendors, companies gain greater control over their AI capabilities and can rapidly innovate, particularly in emerging areas like the Operating System layer.

However, vertical integration also presents significant challenges. Building and maintaining expertise across multiple layers requires substantial resources and a diverse talent pool. There is a risk of becoming too insular, potentially missing out on innovations from the broader AI ecosystem. Managing a vertically integrated AI stack can also be more complex than relying on specialized vendors for each layer. Nevertheless, the opportunities are immense for companies that can overcome these challenges. They can create tightly integrated AI solutions that are difficult for competitors to replicate, allowing them to respond more quickly to technological changes or market demands.

Vertical integration in AI offers a unique opportunity to harness data network effects.

As companies control more layers of the AI stack, they can create positive feedback loops—where more data leads to better AI performance, which attracts more users and generates even more data. This virtuous cycle can establish significant competitive advantages and barriers to entry for rivals. In a vertically integrated AI company, these data network effects can be even more pronounced, as the company controls data collection, processing, and utilization across all stack layers. This can lead to a compounding effect that is difficult for non-integrated competitors to match.

Strategic Considerations for Vertical Integration

Vertical integration in AI offers compelling advantages for companies with a unique combination of assets and capabilities.

Ideal candidates for vertical integration are organizations with established distribution channels, which provide an immediate avenue for scaling AI solutions.

These companies often have well-defined processes that can be transferred to AI agents, leading to rapid operational improvements. Crucially, they possess raw data and rich domain knowledge, which are essential for training and refining AI models. This creates a powerful feedback loop: as AI agents enhance processes, the improved outcomes feed back into the system, further refining the underlying models. The effect compounds, because distribution, process optimization, and knowledge transfer reinforce one another in a cycle of continuous improvement and innovation that is difficult for competitors to replicate.

Operational complexity is another crucial factor favoring vertical integration. Companies with multifaceted processes and diverse business units find numerous opportunities for AI-driven optimizations. Strong technical capabilities are also essential, enabling the effective integration and management of AI systems, ensuring these technologies are utilized to their full potential, and driving improved business outcomes.

Low-margin, high-volume businesses are particularly well-suited for AI integration.

Even small efficiency gains can significantly impact the bottom line in these environments, directly translating into shareholder value for public companies. The large transaction volumes typical of these businesses generate vast amounts of data, fueling continuous AI model improvements and justifying the substantial investments required for comprehensive integration. The compounding benefits of increased efficiency, data-driven decisions, and innovative product offerings can create a virtuous cycle, leading to higher revenues, improved margins, and rising stock prices, which makes vertical AI integration a powerful strategy for maximizing shareholder value.
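To make the arithmetic behind this claim concrete, the short Python sketch below compares how the same cost reduction affects profit in a low-margin and a high-margin business. All figures (revenue of 100, net margins of 3% and 30%, a 1% cost reduction) are hypothetical and chosen only for illustration; they are not drawn from any company discussed here.

```python
def profit_uplift(revenue: float, margin: float, cost_reduction: float) -> float:
    """Relative increase in profit when total costs fall by `cost_reduction`.

    `margin` is net profit as a fraction of revenue. All inputs are
    hypothetical illustration values, not real company data.
    """
    costs = revenue * (1.0 - margin)
    profit_before = revenue - costs
    profit_after = revenue - costs * (1.0 - cost_reduction)
    return (profit_after - profit_before) / profit_before


# Same 1% cost reduction applied to two hypothetical businesses.
low_margin_uplift = profit_uplift(revenue=100.0, margin=0.03, cost_reduction=0.01)
high_margin_uplift = profit_uplift(revenue=100.0, margin=0.30, cost_reduction=0.01)

print(f"Low-margin business (3%):   profit rises by {low_margin_uplift:.1%}")
print(f"High-margin business (30%): profit rises by {high_margin_uplift:.1%}")
```

Under these assumptions, a 1% cost reduction lifts profit by roughly a third in the low-margin business but by only about 2% in the high-margin one, which is why efficiency gains matter disproportionately when margins are thin.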

Industries such as retail, logistics, and manufacturing exemplify sectors where AI-driven efficiency gains can lead to substantial profitability improvements. In retail, AI can optimize inventory management and personalize customer experiences. Logistics benefits from enhanced route planning and predictive maintenance, while manufacturing can optimize production schedules and improve quality control. The potential for AI to drive efficiencies and create value makes these industries ideal candidates for vertical AI integration.

Successful vertical integration of AI can yield a wide range of operational improvements with direct bottom-line impact. AI-driven automation and optimization can significantly reduce costs across various business processes. The data-driven insights generated by AI systems can vastly improve decision-making, enhancing strategic planning and day-to-day operations. Moreover, AI integration often improves customer experiences, increasing loyalty and driving higher sales.

Perhaps most significantly, AI integration can unlock new revenue streams by enabling innovative products or services that were previously impossible. For instance, manufacturing companies might offer predictive maintenance services, or retailers could create highly personalized shopping experiences that boost customer engagement and sales.

Collectively, these factors contribute to increased shareholder value, particularly in low-margin businesses where even minor efficiency improvements can significantly impact profitability. By vertically integrating AI capabilities, companies can establish a sustainable competitive advantage that is difficult for competitors to replicate. This approach can lead to long-term market leadership and enhanced shareholder returns, positioning vertically integrated companies at the forefront of AI-driven business transformation.

Reverse Acqui-hire: A Strategic Path to Vertical Integration

Among the various strategies for achieving vertical integration in AI, the reverse acqui-hire has emerged as a particularly efficient approach. This method involves a larger company hiring a significant portion of a startup's workforce, often including key personnel and founders, while simultaneously licensing the startup's technology. Unlike in a traditional acquisition, the startup continues to exist as a separate entity, albeit with a reduced workforce. This approach offers unique advantages for both parties involved.

For the acquiring company, a reverse acqui-hire provides a swift and efficient means to integrate cutting-edge AI talent and technology into their existing operations. By bringing in a cohesive team that has already worked together on innovative AI solutions, the acquirer can rapidly enhance its AI capabilities across multiple layers of the stack. This influx of specialized knowledge and skills can accelerate the company's AI initiatives, potentially leapfrogging competitors in technological advancement. Moreover, by opting to license the startup's technology rather than purchasing it outright, the acquirer can avoid some regulatory scrutiny that often accompanies full acquisitions, particularly in the competitive AI landscape.

From the startup's perspective, a reverse acqui-hire offers a way to realize value based on their theoretical option premium without fully developing distribution capabilities or achieving profitability independently. This can be particularly attractive for startups that have made significant technological advancements but need help scaling or bringing their innovations to market. The licensing agreement provides a source of revenue to sustain the startup's continued operations and fund further research and development. Additionally, key team members who join the acquiring company gain access to greater resources and a larger platform to implement their ideas, potentially seeing their innovations adopted at a scale that might have been difficult to achieve independently.

This approach fosters a unique synergy between the two entities. The startup retains its independence and the ability to continue innovating, potentially leading to future breakthroughs that could benefit the acquirer through its ongoing licensing agreement. Meanwhile, the acquiring company can deeply integrate AI capabilities into its operations, creating a more vertically integrated structure that spans from foundational AI technologies to end-user applications. This synergy can result in a powerful feedback loop, where the practical implementation of AI solutions in the acquirer's operations informs and guides the startup's ongoing research and development efforts.

The reverse acqui-hire strategy thus represents a nuanced approach to vertical integration that balances the need for rapid AI capability enhancement with the benefits of maintaining a degree of separation and autonomy for the innovative startup team. It allows both entities to play to their strengths – the startup focusing on cutting-edge innovation and the acquirer leveraging its resources and market position to implement and scale these innovations. As the AI landscape evolves rapidly, this flexible and adaptive approach to vertical integration may prove increasingly valuable for companies striving to establish or maintain leadership in AI-driven industries.

Startup Valuation and the Role of Option Premium

The valuation of AI startups, especially those that have yet to generate revenue, is often based on a theoretical option premium. This approach reflects the potential future value of the company's technology, acknowledging that many AI startups prioritize long-term innovation over immediate profitability. The primary goal for these companies is often to position themselves for acquisition rather than to achieve sustained profitability on their own. The option premium represents the market's estimate of the company's future success and its potential to generate significant returns through technological innovation.

Theta decay from options pricing theory can help explain AI startup valuation dynamics in the context of vertical integration. Just as options lose value over time, the potential value of an AI startup's innovations may diminish if not realized quickly. This pressures startups to achieve significant milestones or position themselves for acquisition before their perceived option value decays. Vertically integrated companies may be better positioned to maintain their value over time as they have more control over the entire value chain and can more quickly bring innovations to market, potentially slowing the rate of theta decay.
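For readers unfamiliar with theta decay, the Python sketch below shows the effect on an ordinary financial option: with everything else held constant, the option's value shrinks as the time left to expiry runs out. It uses the standard Black-Scholes formula for a European call, which is a stand-in chosen for illustration and not a valuation method proposed in this article. The inputs (a price and strike of 100, 60% volatility, a zero interest rate) are hypothetical, and mapping them onto a real startup is an analogy only.

```python
import math


def norm_cdf(x: float) -> float:
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def call_value(spot: float, strike: float, years_left: float,
               volatility: float, rate: float = 0.0) -> float:
    """Black-Scholes value of a European call option (no dividends)."""
    if years_left <= 0:
        # At expiry only the intrinsic value remains.
        return max(spot - strike, 0.0)
    sd = volatility * math.sqrt(years_left)
    d1 = (math.log(spot / strike)
          + (rate + 0.5 * volatility ** 2) * years_left) / sd
    d2 = d1 - sd
    return (spot * norm_cdf(d1)
            - strike * math.exp(-rate * years_left) * norm_cdf(d2))


# Hypothetical inputs: value the same option with less and less time left.
horizons = [3.0, 2.0, 1.0, 0.5, 0.0]
values = [call_value(100.0, 100.0, t, 0.6) for t in horizons]

for t, v in zip(horizons, values):
    print(f"{t:.1f} years left: option value {v:.2f}")
```

The printed values fall steadily as the horizon shortens and reach zero at expiry. In the analogy, a startup that neither hits milestones nor gets acquired sees its perceived option value erode in a similar way.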

AI startups must balance preserving their option premium with continuous innovation. This delicate balance involves advancing cutting-edge technology without prematurely scaling, which could diminish the company's perceived value. To achieve this, startups must focus on continuous innovation to maintain their technological edge, ensuring their products or services remain attractive to potential acquirers. At the same time, it's crucial to align development efforts with the needs and expectations of potential acquirers, which can help ensure that the startup's innovations are seen as valuable and relevant within the broader market.

Rather than attempting to build out full distribution capabilities, which can be resource-intensive and risky, AI startups may benefit from strategically partnering with companies that have already established distribution networks.

This approach allows startups to realize value based on their theoretical option premium rather than relying on actual revenue or profit generation. By partnering with or selling to larger companies that can integrate AI technology into their existing distribution mechanisms, startups can focus on what they do best—innovation—while leaving the complexities of scaling and distribution to more established players.

This strategy also tackles the 'difference of rates' problem: startups that secure distribution before incumbents master innovation are more likely to succeed. By aligning with companies that have strong distribution capabilities, AI startups can accelerate their path to market and increase their chances of success. This approach can be particularly effective in industries where distribution and customer acquisition pose high barriers to entry.

However, this strategy has its risks. Startups must carefully consider the terms of any partnership or acquisition to ensure they retain sufficient control over their technology and can continue innovating. They could also become too dependent on a single partner or acquirer, limiting future opportunities. Startups must balance leveraging the resources and reach of larger partners with preserving their identity and capacity for innovation.

Risks and Challenges of Vertical Integration

While vertical integration in AI offers numerous benefits, it also presents significant challenges that companies must navigate carefully. The cultural integration required when merging AI startups with larger, established companies is a primary hurdle. The clash between a startup's innovative, fast-paced environment and a larger corporation's structured, hierarchical culture can lead to misalignments in goals, work styles, and decision-making processes, potentially hindering integration and slowing innovation.

Integrating disparate technologies and systems is also technically challenging, requiring careful planning and execution to ensure the combined systems function smoothly. The integration process may expose incompatibilities that must be addressed, further complicating the effort. This is particularly true of cutting-edge AI technologies, which may not integrate easily with the legacy systems found in larger organizations.

Another significant risk of vertical integration is the potential for stifling innovation within the acquired AI startup. When a startup becomes part of a larger organization, it may lose the flexibility and agility that allowed it to innovate rapidly in the first place. The startup may also have less exposure to external ideas and technologies, reducing the opportunities for creative problem-solving and cross-pollination of ideas. Moreover, the startup could become complacent due to reduced competitive pressure, leading to a slowdown in the pace of innovation. There's also the risk of overlooking disruptive technologies that do not fit within the integrated model, which could leave the company vulnerable to future market shifts.

To mitigate these challenges, companies should consider maintaining semi-autonomous AI divisions within the larger organization, preserving the startup's culture and agility while leveraging the parent company's resources. Establishing clear integration roadmaps with defined milestones can help manage the process effectively, addressing cultural and technological hurdles. These roadmaps should include plans for knowledge transfer, system integration, and cultural alignment, with regular progress assessments and adjustments.

To stay at the forefront of AI innovation, companies must employ dedicated AI researchers who continuously evaluate cutting-edge developments, ensuring the team remains ahead of the curve. Maintaining a team that operates at least 18 months ahead of the current technology landscape, particularly relative to foundational model companies, is essential. Additionally, team members should focus on writing code around narrowly defined use cases, which drives operational improvements, captures insights, and fosters opportunities for new methods and technologies.

Furthermore, companies must engage with the broader AI ecosystem through partnerships, collaborations, and open innovation initiatives. This engagement helps new ideas and technologies flow into the organization, preventing the insularity that can sometimes result from vertical integration. Participating in industry conferences, supporting academic research, and engaging in open-source projects are all effective strategies for keeping the company at the forefront of AI innovation.

Finally, transforming AI research, code, and innovations into production systems requires team members who can work closely with stakeholders to translate these advancements into concrete use cases with clear operational benefits. Involving stakeholders early in the process ensures that the work on both the front and back end aligns with known operational improvements, ultimately driving the organization’s competitive edge.

The Strategic Imperative of Vertical Integration: Preparing for an AI-First Future

The future of AI in vertically integrated companies presents compelling opportunities for both startups and established enterprises. For AI startups, vertical integration through acquisition offers a rapid path to scale their technologies, leverage existing customer bases, and access substantial resources for further development. These startups can benefit from larger companies’ established distribution channels, industry knowledge, and operational expertise, potentially accelerating their growth and market impact.

Conversely, established companies that acquire AI startups stand to gain cutting-edge technologies and talent, enabling them to swiftly enhance their AI capabilities and maintain competitiveness in an increasingly AI-driven market. By integrating AI startups, these companies can infuse innovation throughout their operations, potentially leading to improved efficiency, new product offerings, and enhanced customer experiences. The synergy between the startup's agility and the established company's scale can create a powerful engine for innovation and growth.

Vertical integration in AI offers unique advantages that extend beyond simple technology acquisition. It allows companies to create tightly integrated AI solutions across their entire stack, from foundational models to end-user applications. This integration can lead to superior performance, better data utilization, and the creation of proprietary AI capabilities that are difficult for competitors to replicate. Moreover, vertically integrated companies can create powerful feedback loops, where improvements in one layer of the AI stack cascade through the entire system, leading to compounding benefits over time.

However, it's crucial to recognize that the current vertical integration landscape primarily involves large incumbents acquiring emerging AI startups. This trend is driven by the bottom-up construction of the AI stack, where the foundational layers are being established before the application layer fully matures. This evolution creates a compelling dynamic: when true AI-first companies eventually enter the market, they may embody a radically different paradigm.

These AI-native enterprises, constructed from inception with AI at their core, have the potential to disrupt entire industries, much like how Netflix revolutionized the entertainment landscape.

They will likely operate with fundamentally different organizational and technological architectures, gaining significant advantages over traditional companies that have merely augmented their existing structures with AI capabilities. This stark contrast underscores the urgency for established firms to integrate AI deeply, not just as an add-on but as a foundational element of their business model.

This situation presents both a challenge and an opportunity for today's incumbents. There is still time for established companies to integrate AI deeply into their businesses, leveraging their existing strengths to build formidable moats. By doing so, they can position themselves to compete effectively when AI-first companies eventually enter the market. However, the window for this integration is narrowing. Companies that fail to embrace comprehensive AI integration may find themselves at a severe disadvantage when facing these new AI-native competitors.

In essence, the current phase of vertical integration in AI is not just about enhancing existing businesses—it’s about preparing for a future where AI is the foundation of business operations. It’s a call to action for incumbents to reimagine their businesses through an AI lens while they can still leverage their current market positions. The alternative may be facing disruption from AI-first companies that could potentially reshape entire industries, much like the digital natives of the past two decades have done in their respective sectors.

Conclusion

Our exploration of vertical integration in AI has revealed its critical importance in shaping the future of businesses across industries. We've traced the evolution from machine learning to AI, highlighting how the lessons learned from the early days of ML inform today's AI strategies. Treating AI as a feature rather than a standalone product has emerged as a key paradigm shift, necessitating deep integration into existing business processes.

The AI stack's structure and importance have been central to our discussion, demonstrating how control over multiple layers can lead to significant competitive advantages. We've examined the strategic considerations for vertical integration, particularly for companies with existing distribution channels, rich data assets, and complex operations. The valuation dynamics for AI startups, centered around option premiums, underscore the time-sensitive nature of AI integration and acquisition strategies.

While we've acknowledged the risks and challenges of vertical integration, including cultural clashes and the potential for stifling innovation, we've also outlined strategies to mitigate these issues. The future landscape of AI in vertically integrated companies promises transformative potential, with the ability to create powerful feedback loops and network effects that can reshape entire industries.

The emergence of AI-first companies looms, presenting both a threat and an opportunity for today's incumbents. This underscores the urgency for established companies to act now, leveraging their current strengths to build AI-centric operations before they face disruption from new, AI-native competitors.

Vertical integration in AI is not just a strategic advantage; it is an imperative for long-term success in an AI-driven future.

By integrating AI deeply into their operations, companies can build formidable competitive advantages that will be difficult for others to replicate. Companies that successfully navigate this integration by balancing innovation with practical implementation will be best positioned to thrive. The window of opportunity is open but may not remain so indefinitely. For business leaders, the time to act is now: to reimagine their operations through the lens of AI, to build or acquire the necessary capabilities across the AI stack, and to prepare for a future where AI is not just a tool but the foundation of the business itself.

The race to an AI-first future is underway. Those who embrace vertical integration in AI today are not just preparing for tomorrow—they're shaping it.